Snowflake's COPY Command: The Key to Efficient Data Loading

The Power of Data Loading in Snowflake

In today's data-driven world, the ability to load large volumes of information efficiently and reliably has become a critical competency for any organization. Within the Snowflake ecosystem, few tools are as fundamental as the COPY command, a powerful functionality that forms the backbone of many data ingestion architectures.

As a data engineer with years of experience implementing enterprise solutions, I've witnessed firsthand how proper use of the COPY command can transform loading processes that previously took hours into operations that complete in minutes, while simultaneously improving data quality and reliability.

What is the COPY Command in Snowflake?

The COPY command in Snowflake is a SQL instruction specifically designed to load data from external files stored in cloud platforms into Snowflake tables. Its primary purpose is to provide an efficient, flexible, and controllable way to move large volumes of data.

Unlike traditional loading methods, COPY leverages Snowflake's distributed architecture to parallelize operations, resulting in exceptional performance even with massive datasets.

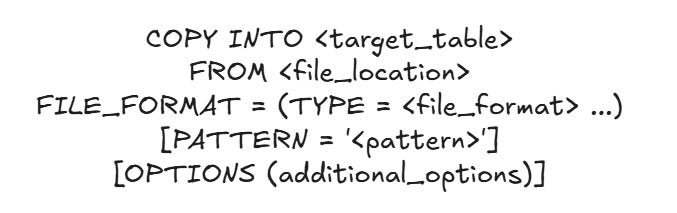

In its basic form, the command follows this structure:

COPY INTO <target_table>

FROM <file_location>

FILE_FORMAT = (TYPE = <file_format> ...)

[PATTERN = '<pattern>']

[OPTIONS (additional_options)]Main Components of the COPY Command

1. Destination (INTO)

Specifies the table or materialized view where data will be loaded:

Can be a standard, temporary, transient table, or materialized view

2. Source (FROM)

Defines where the files to be loaded are stored:

Amazon S3, Google Cloud Storage, Microsoft Azure, or Snowflake Stage

3. File Format

Defines how Snowflake should interpret the files:

Supported formats: CSV, JSON, AVRO, ORC, PARQUET, XML

Configuration options for delimiters, headers, null values, etc.

4. Patterns and Additional Options

Patterns to select file subsets

Configurations for error handling, maximum size, automatic purging, etc.

Modes and Variants of the COPY Command

The COPY command is versatile and adapts to different needs:

COPY INTO TABLE (Standard Load)

For loading data from external location to a table

COPY INTO LOCATION (Unload)

Allows extracting data from Snowflake to external storage

COPY with Transformation

Allows transforming data during loading using a SQL query

COPY Validation

For validating data without actually loading it

Integration with Snowflake Stages and Relationship with the PUT Command

Stages are designated locations where Snowflake can access data files, acting as intermediaries between external storage and tables.

Types of Stages

Internal Stages: Storage managed by Snowflake

External Stages: References to locations in cloud storage

User Stages: Private to a specific user

Temporary Stages: Available only during the current session

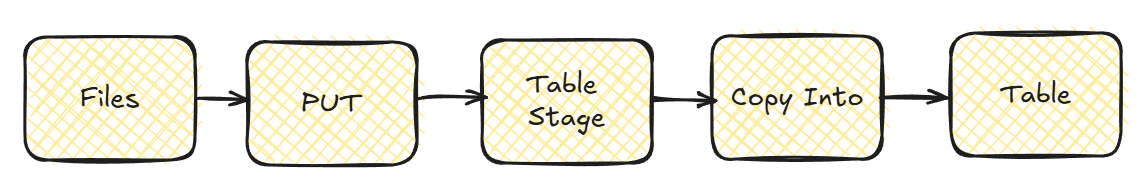

Relationship between PUT and COPY

It's important to understand the complementary relationship between the PUT and COPY commands:

PUT Command: Used to upload files from your local system to a Snowflake stage. It operates at the file system level.

COPY Command: Reads data from files already located in stages and loads them into tables. It operates at the data level.

In a typical loading flow from local files:

-- Step 1: Upload local file to an internal stage

PUT file:///C:/data/january_sales.csv @my_internal_stage;

-- Step 2: Load data from the stage into the table

COPY INTO sales_table

FROM @my_internal_stage/january_sales.csv

FILE_FORMAT = (TYPE = 'CSV', SKIP_HEADER = 1);For files already located in cloud storage (S3, Azure, GCP), you typically only need the COPY command with a properly configured external stage.

Loading Strategies for Different Volumes

Small Files (< 100MB)

Consider file consolidation

Possibly disable parallelism with

SINGLE = TRUE

Medium Volumes

Leverage default parallelism

Use columnar formats (Parquet) for better performance

Massive Data

Split the load into multiple parallel COPY commands

Use larger warehouses

Implement progress monitoring

Error Handling and Validation

The COPY command offers several strategies for handling errors:

ABORT_STATEMENT (default): Stops the entire operation upon any error

SKIP_FILE: Skips entire files containing errors

SKIP_FILE_N%: Skips files exceeding a specific percentage of errors

CONTINUE: Skips individual records with errors

The validation mode allows testing the load without inserting data:

COPY INTO customers

FROM @customer_stage

VALIDATION_MODE = 'RETURN_ERRORS';Common Implementation Patterns

Incremental Loading with Duplicate Control

Using control tables that record already processed files

Loading JSON Files with Nested Structure Processing

Using functions like FLATTEN to normalize nested data

Multi-Stage Loading Pipeline

Implementing raw, staging, and curated zones to progressively process data

Performance Optimization

Key Factors

Warehouse Size

Larger warehouses provide greater parallelism

Recommendation: Start with Medium (M) and scale as needed

File Format and Size

Columnar formats (Parquet, ORC) are more efficient

Ideal size: 100-250 MB per file

Compression

GZIP: Good compression, not splittable

SNAPPY: Less compression, better parallelism

ZSTD: Good balance

Optimization Tips

Consolidate small files before loading

Temporarily scale the warehouse for large loads

Divide massive loads by logical partitions

Integration with Snowflake Ecosystem

The COPY command integrates with other Snowflake capabilities:

Snowpipe: For automatic real-time data loading

Tasks: For automating scheduled loads

Streams: For incrementally processing loaded data

Best Practices

Pipeline Design

Implement multi-stage architecture (raw, staging, curated)

Establish clear retention policies

Design for recovery and re-executability

Security

Avoid hardcoded credentials

Apply principle of least privilege

Audit loading operations

Performance

Optimize file format and size

Balance resources according to volume

Parallelize strategically

Enterprise Use Cases

Retail

Processing millions of daily transactions from multiple stores, reducing latency from hours to minutes and providing near real-time visibility.

Financial Services

Consolidation of data from multiple systems for regulatory reports with strict validation requirements, ensuring compliance and traceability.

Healthcare

Loading and processing data from medical devices and electronic records, enabling near real-time analysis while maintaining privacy and security.

Conclusion

The COPY command is much more than a simple loading tool; it's a strategic component for modern data architectures in Snowflake. Its versatility, performance, and integration capabilities make it essential for any serious implementation.

The key to success lies in understanding its capabilities, applying best practices, and adapting it to the specific requirements of your use case.

Have you implemented solutions with the COPY command? What strategies have been most effective for you? I'd love to hear about your experiences in the comments section.